LLMs are capable of producing incorrect or misleading results with a confident and authoritative tone.

TRAINING TRANSPARENCY

Many AI companies run crawlers that operate without transparency, potentially monetizing content without permission or compensation.

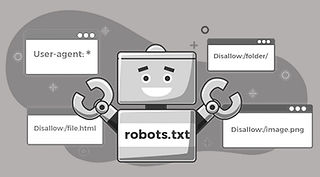

AI Crawlers: bots that gather data from websites to train large language models (LLMs). They collect content such as text, articles, and images to generate answers, without sending visitors to the original source.

Example: The Robots Exclusion Protocol, implemented as a robots.txt file, tells web robots which parts of a site they may and may not access.
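To make the protocol concrete, here is a minimal Python sketch that uses the standard library's `urllib.robotparser` to read a robots.txt file and ask whether a given crawler may fetch a page. The file contents, the `example.com` URLs, the `SomeOtherBot` name, and the helper `is_allowed` are made up for illustration; `GPTBot` is used only as an example of an AI crawler's user-agent token.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: asks one AI crawler to stay off the whole site
# and asks every other bot to stay out of /private/ only.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for agent in ("GPTBot", "SomeOtherBot"):
        for path in ("/articles/story.html", "/private/draft.html"):
            url = "https://example.com" + path
            print(agent, path, is_allowed(ROBOTS_TXT, agent, url))
```

The parser only reports what the site owner has requested. Compliance is voluntary, so a crawler that ignores the file is not technically blocked by it.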

Example: In some instances, ChatGPT and Perplexity answered queries incorrectly about publishers that permitted crawler access, while at other times they answered queries correctly about publishers that did not permit access.

AI Search Has A Citation Problem - Columbia Journalism Review

HALLUCINATIONS

Hallucinations can involve inventing facts, misattributing sources, or producing plausible-sounding misinformation.

Hallucinations: the phenomenon where an AI model generates incorrect or nonsensical information, despite having been trained on a large dataset.

Example: My Pillow CEO Mike Lindell's lawyers violated court rules when they filed a document filled with more than two dozen mistakes — including hallucinated fake cases made up by AI.

A Recent High-Profile Case of AI Hallucination Serves as a Stark Warning - NPR

Example: As AI models are integrated into society and used to help automate various systems, the tendency to hallucinate is one factor that can lead to the degradation of overall information quality and further reduce the veracity of and trust in freely available information.

OpenAI releases highly anticipated GPT-4 model (but beware hallucinations) - TechFinitive

SHORTCOMINGS

Chatbots often fail to cite information correctly.

Example: In 60 percent of the queries, the chatbots tested provided incorrect answers. Perplexity answered 37 percent of queries incorrectly.

AI Search Has A Citation Problem - Columbia Journalism Review

Example: More than 50 percent of the responses from Grok 3 and Gemini cited fabricated or broken URLs.

AI Search Has A Citation Problem - Columbia Journalism Review